Beyond Pixels: the virtualized rendering revolution, second episode.

From data processing to rendering every pixel, the graphics pipeline is a precise mechanism that brings interactive worlds to life, whether in video games, film production, simulation, or digital twins.

And just like in a dance, it’s the rhythm that makes the magic happen.

What happens when your virtual experience stutters? Often, it’s because a simple rule was broken: all the work behind each image on screen (logic, simulation, lighting, rendering, post-processing) must be completed within roughly 16 milliseconds. Why? Because that’s the time budget per frame at 60 FPS. Miss it, and the illusion collapses.

At SKYREAL, our developers obsess over these 16 ms. It’s not just a performance target; it’s the heartbeat of real time. In this article, we explore how a modern 3D engine races against the clock to meet that deadline.

A constant race against time

“A 3D engine is basically a super-fast courier service: the CPU writes postcards describing the world, the GPU turns them into images you can see, and both must finish in ≤ 16 ms.”

Malek Fardeau, Unreal Engine developer, from the poetic city of Marseille
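Malek’s two couriers do not have to take turns. In a pipelined setup, which we assume here, the CPU prepares the next frame while the GPU draws the current one, so each side gets its own budget and the slower of the two sets the frame rate. The minimal Python sketch below models that idea; the function names and timings are hypothetical, and real engines add buffering and synchronization that it ignores.

```python
# Hypothetical per-frame costs, in milliseconds: cpu_ms covers game logic,
# culling and command recording; gpu_ms covers rasterization and shading.
FRAME_BUDGET_MS = 1000.0 / 60.0


def serialized_frame_ms(cpu_ms: float, gpu_ms: float) -> float:
    """CPU and GPU take turns on the same frame, so their costs add up."""
    return cpu_ms + gpu_ms


def pipelined_frame_ms(cpu_ms: float, gpu_ms: float) -> float:
    """The CPU prepares frame N+1 while the GPU renders frame N.

    A new frame comes out every max(cpu_ms, gpu_ms): the slower side sets the pace.
    """
    return max(cpu_ms, gpu_ms)


def min_latency_ms(cpu_ms: float, gpu_ms: float) -> float:
    """Even when pipelined, a single frame still passes through CPU, then GPU."""
    return cpu_ms + gpu_ms


cpu_ms, gpu_ms = 10.0, 14.0  # hypothetical timings
print(serialized_frame_ms(cpu_ms, gpu_ms) <= FRAME_BUDGET_MS)  # False: 24 ms
print(pipelined_frame_ms(cpu_ms, gpu_ms) <= FRAME_BUDGET_MS)   # True: 14 ms
```

The trade-off shows up in `min_latency_ms`: pipelining raises throughput, but each individual frame still takes the full CPU plus GPU time to reach the screen.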

Technical origin of the rule

A 60 Hz display refreshes its image 60 times per second.

This sets a frame time budget of 1 s / 60 ≈ 16.67 ms for all the work of a frame, on both the CPU and the GPU.

If a frame takes longer than that, it misses the next screen refresh, which shows up as stuttering or a noticeable drop in FPS.
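The arithmetic is easy to sketch. The Python snippet below is a minimal illustration assuming classic double-buffered vsync (no variable refresh rate); the helper names are hypothetical. It computes the per-frame budget for a few refresh rates and shows why a late frame costs a whole extra refresh: a 20 ms frame at 60 Hz stays on screen for two refresh periods, about 33.3 ms, which feels like 30 FPS.

```python
import math


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame at a given refresh rate."""
    return 1000.0 / refresh_hz


def displayed_interval_ms(frame_ms: float, refresh_hz: float) -> float:
    """How long a frame stays on screen under double-buffered vsync.

    The image can only be swapped on a refresh boundary, so a late frame waits
    for the next one: the interval rounds up to whole refresh periods.
    """
    period_ms = frame_budget_ms(refresh_hz)
    return math.ceil(frame_ms / period_ms) * period_ms


for hz in (30, 60, 90, 120):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.2f} ms")
# 30 Hz -> 33.33 ms, 60 Hz -> 16.67 ms, 90 Hz -> 11.11 ms, 120 Hz -> 8.33 ms

print(f"{displayed_interval_ms(20.0, 60):.2f} ms")  # 33.33 ms: two refresh periods
```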

(Note: 60 Hz displays became standard in the 1990s and were widely adopted in real-time graphics and video games starting in the early 2000s.)

Why 16 ms Matters Beyond Games

Architects, engineers, and designers now rely on real-time engines not just for visuals, but for decision-making. Real-time means:

- Instant feedback when testing designs

- Fusion of CAD, BIM, lidar, and sensor data

- Immersive interactivity that clarifies complex systems

But all of this has to fit in a tiny window of time. And that’s where the magic and the challenge lie.

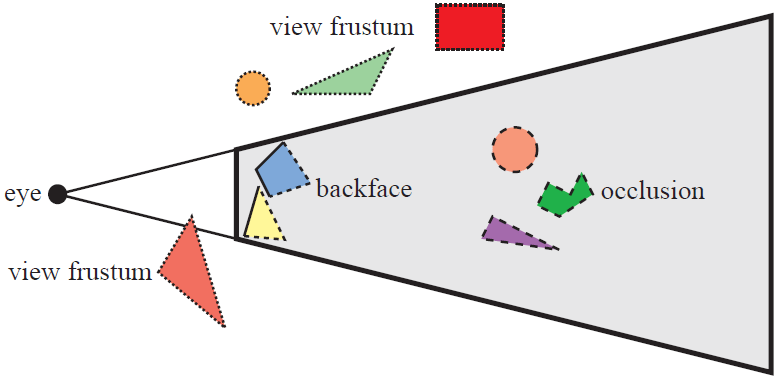

The foundation of optimization: Culling, the art of saying “No” to draw calls

A GPU that shades what the player can’t see wastes watts and milliseconds. Engines ruthlessly cull:

- Frustum Culling: discard objects outside the view frustum, the truncated pyramid the camera can see.

- Occlusion Culling: hierarchical Z-buffers (HZB) or small software-rasterized depth buffers test whether an object is hidden behind other geometry.

- Backface Culling: skip triangles facing away from the camera; on closed meshes they are always hidden, so there is no need to shade them.
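The three tests above can be sketched in a few lines of Python. This is a simplified, CPU-side illustration, not engine code, and it rests on assumptions of our own: bounding spheres tested against inward-facing, unit-length frustum planes; counter-clockwise winding for front faces; and a tiny hierarchical Z-buffer that stores the farthest depth (0 = near, 1 = far) of each 2x2 block. All names and the 90-degree test frustum are hypothetical.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


# --- Frustum culling: bounding sphere vs. inward-facing planes (n, d) -------
def sphere_in_frustum(center, radius, planes):
    """Keep the object unless its sphere lies fully behind some plane."""
    for normal, d in planes:  # normals are unit length and point inward
        if dot(normal, center) + d < -radius:
            return False
    return True


# Hypothetical 90-degree, square-aspect frustum: camera at origin, looking down -Z.
S = 1.0 / math.sqrt(2.0)
FRUSTUM = [
    ((0.0, 0.0, -1.0), -0.1),  # near plane: z <= -0.1
    ((0.0, 0.0, 1.0), 100.0),  # far plane:  z >= -100
    ((-S, 0.0, -S), 0.0),      # right:  x <= -z
    ((S, 0.0, -S), 0.0),       # left:   x >= z
    ((0.0, -S, -S), 0.0),      # top:    y <= -z
    ((0.0, S, -S), 0.0),       # bottom: y >= z
]


# --- Backface culling: counter-clockwise winding marks the front face ------
def is_backface(v0, v1, v2, eye):
    normal = cross(sub(v1, v0), sub(v2, v0))
    return dot(normal, sub(v0, eye)) >= 0.0


# --- Occlusion culling with a hierarchical Z-buffer (max-depth pyramid) ----
def build_hzb(depth):
    """depth: square, power-of-two grid; 0.0 = near plane, 1.0 = far plane."""
    levels = [depth]
    while len(levels[-1]) > 1:
        prev, half = levels[-1], len(levels[-1]) // 2
        levels.append([[max(prev[2 * y][2 * x], prev[2 * y][2 * x + 1],
                            prev[2 * y + 1][2 * x], prev[2 * y + 1][2 * x + 1])
                        for x in range(half)] for y in range(half)])
    return levels


def hzb_occluded(levels, rect, nearest_depth):
    """rect = (x0, y0, x1, y1): inclusive pixel bounds of the object on screen."""
    x0, y0, x1, y1 = rect
    lvl = 0
    # Climb to a coarser mip until the rect covers at most 2x2 texels.
    while lvl + 1 < len(levels) and max((x1 >> lvl) - (x0 >> lvl),
                                        (y1 >> lvl) - (y0 >> lvl)) > 1:
        lvl += 1
    mip = levels[lvl]
    farthest = max(mip[y][x]
                   for y in range(y0 >> lvl, (y1 >> lvl) + 1)
                   for x in range(x0 >> lvl, (x1 >> lvl) + 1))
    # Hidden only if the object's closest point is behind everything drawn there.
    return nearest_depth > farthest


depth = [[0.2, 0.2, 1.0, 1.0] for _ in range(4)]  # a wall covers the left half
hzb = build_hzb(depth)
print(hzb_occluded(hzb, (0, 0, 1, 3), 0.5))  # True: behind the wall
print(hzb_occluded(hzb, (2, 0, 3, 3), 0.5))  # False: nothing in front
```

Using the farthest depth per block keeps the occlusion test conservative: a coarse texel can only make an object look *more* visible, never hide something that should be drawn.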

The surviving objects are then grouped by material to minimize costly GPU state changes. In DirectX 12 and Vulkan, this means fewer state-setting commands recorded and submitted to the GPU queue.
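To see why grouping pays off, the sketch below counts how often the material changes between consecutive draws (including the first bind), a rough stand-in for GPU state changes, before and after sorting. `DrawItem` and the material names are hypothetical; real renderers often sort on a packed key that also includes pipeline state and depth, which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrawItem:
    mesh: str
    material: str


def count_material_switches(draws):
    """Count material binds: one each time a draw differs from the previous one."""
    switches, current = 0, None
    for draw in draws:
        if draw.material != current:
            switches += 1
            current = draw.material
    return switches


def sort_by_material(draws):
    # sorted() is stable, so draws sharing a material keep their relative order.
    return sorted(draws, key=lambda draw: draw.material)


visible = [
    DrawItem("wall", "brick"),
    DrawItem("door", "wood"),
    DrawItem("window", "glass"),
    DrawItem("floor", "wood"),
    DrawItem("chimney", "brick"),
]
print(count_material_switches(visible))                    # 5
print(count_material_switches(sort_by_material(visible)))  # 3
```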

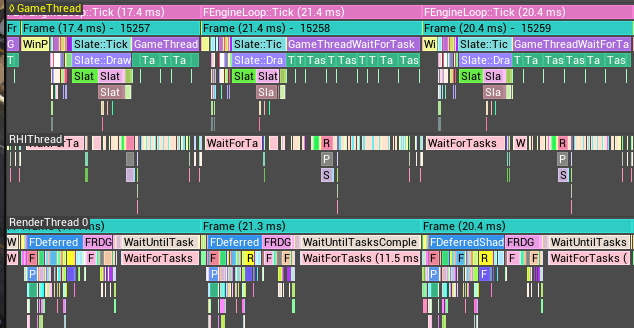

Single-Threaded and Multithreaded Frame Pipelines: Fitting a Universe in 16 ms

Until around 2010, the traditional single-threaded frame pipeline ran each task sequentially, and all of them had to fit within the 16 ms budget.

To stay within budget, every subsystem must deliver its part quickly and efficiently:

| Stage | Budget (60 FPS) |
|---|---|
| CPU + Culling | ≤ 4 ms |
| Geometry + Raster | ≤ 3 ms |
| Pixel Shading | ≤ 4 ms |
| Post-Processing + UI | ≤ 3 ms |
| Frame Total | ≤ 16 ms |
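The stage budgets in the table add up to 14 ms, leaving roughly 2.7 ms of headroom under the 16.67 ms frame. A frame checker along these lines, shown as a minimal Python sketch with hypothetical profiler readings, flags stages that overrun their own budget while still reporting whether the frame as a whole fits:

```python
FRAME_BUDGET_MS = 1000.0 / 60.0  # about 16.67 ms at 60 FPS

# Stage budgets from the table above, in milliseconds.
STAGE_BUDGETS_MS = {
    "CPU + Culling": 4.0,
    "Geometry + Raster": 3.0,
    "Pixel Shading": 4.0,
    "Post-Processing + UI": 3.0,
}


def check_frame(measured_ms):
    """Return (stages over their own budget, total frame time, frame fits?)."""
    over = [stage for stage, ms in measured_ms.items()
            if ms > STAGE_BUDGETS_MS[stage]]
    total = sum(measured_ms.values())
    return over, total, total <= FRAME_BUDGET_MS


headroom = FRAME_BUDGET_MS - sum(STAGE_BUDGETS_MS.values())
print(f"headroom: {headroom:.2f} ms")  # headroom: 2.67 ms

# Hypothetical profiler readings for one frame.
reading = {
    "CPU + Culling": 3.5,
    "Geometry + Raster": 2.8,
    "Pixel Shading": 5.1,
    "Post-Processing + UI": 2.0,
}
print(check_frame(reading))
```

Here pixel shading overruns its own slot, yet the frame still fits because the other stages finish early: per-stage budgets are a guide for finding the culprit, while the frame total is what the player actually feels.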

Since 2010, the widespread adoption of multithreading has allowed modern engines to distribute workloads across multiple CPU cores. This parallelism lets tasks run concurrently, greatly reducing the wall-clock time the CPU side needs per frame. As a result, engines can handle more complex scenes and advanced effects while often keeping frame times below 16 ms.
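How much multithreading helps depends on how much of the frame can actually be split. Amdahl’s law, a standard model brought in here for illustration, gives a quick estimate: the serial part of the work sets a floor that no number of cores can remove. The 12 ms frame and 75% parallel share below are hypothetical, and the model ignores synchronization overhead.

```python
def amdahl_frame_ms(single_core_ms, parallel_fraction, cores):
    """Amdahl's law applied to CPU frame time.

    single_core_ms: frame time when all the work runs on one core.
    parallel_fraction: share of that work that can be split across cores (0..1).
    Synchronization and scheduling overhead are ignored.
    """
    serial_ms = single_core_ms * (1.0 - parallel_fraction)
    parallel_ms = single_core_ms * parallel_fraction / cores
    return serial_ms + parallel_ms


# Hypothetical frame: 12 ms on one core, 75% of the work parallelizable.
for cores in (1, 2, 4, 8):
    print(cores, amdahl_frame_ms(12.0, 0.75, cores))
# 1 12.0
# 2 7.5
# 4 5.25
# 8 4.125
```

Past a handful of cores the gains shrink quickly, because the 3 ms serial share remains: this is why engines also work to make more of the frame parallel, not just to add threads.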

Going below 16 ms per frame makes room for higher frame rates, such as 90 FPS (about 11.1 ms per frame), a common target in VR, and for advanced visual effects such as ray-traced reflections, all without compromising the experience.

Micro-Case Study: Opening a Door

Let’s take a simple action such as opening a door in a 3D scene. Here is a simplified version of what’s happening inside a 16 ms window:

- The CPU updates the door animation.

- Culling determines which objects are visible from your point of view.

- Shaders transform geometry and compute colors, textures, lighting, and shadows (vertex shader, geometry shader, pixel shader…).

- Post-processing adds motion blur, antialiasing, tonemapping, …

- The GPU presents the finished image on screen.
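The first step is the easiest to show in code. Below is a minimal Python sketch of a CPU-side door update, assuming a hypothetical `Door` class eased with a smoothstep curve; engines provide their own animation systems, so this only illustrates the principle. Advancing by the frame’s delta time keeps the door opening in one second whether the engine runs at 60 or 90 FPS.

```python
def smoothstep(t):
    """Ease-in/ease-out curve: 0 at t=0, 1 at t=1, zero slope at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


class Door:
    """Hypothetical door that swings open over `duration_s` seconds."""

    def __init__(self, open_angle_deg=90.0, duration_s=1.0):
        self.open_angle_deg = open_angle_deg
        self.duration_s = duration_s
        self.elapsed_s = 0.0
        self.angle_deg = 0.0

    def update(self, dt_s):
        # Advance by the frame's delta time so the motion does not depend on FPS.
        self.elapsed_s += dt_s
        progress = smoothstep(self.elapsed_s / self.duration_s)
        self.angle_deg = self.open_angle_deg * progress


def simulate(fps, frames):
    door = Door()
    for _ in range(frames):
        door.update(1.0 / fps)
        # Culling, shading, post-processing and presentation would follow here.
    return door.angle_deg


print(round(simulate(60, 30), 1))  # 45.0: halfway after 0.5 s
print(round(simulate(90, 90), 1))  # 90.0: fully open after 1 s, at 90 FPS too
```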

That’s millions of operations hidden under one smooth motion.

16 ms: both a constraint and a design mindset

Modern engines like Unreal Engine 5 turn the old rendering pipeline upside down. Instead of rigid, predefined steps, we now have virtualized, dynamic systems that spend resources only on what the user actually sees.

This shift is especially clear with features like:

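- Nanite: virtualized geometry that streams and renders only the mesh clusters needed at the current level of detail, with GPU-driven culling that largely removes per-object draw calls.

- Lumen: delivers dynamic global illumination and reflections with a hybrid of screen-space tracing and software or hardware ray tracing.

- Bindless rendering: lets shaders index large descriptor tables instead of fixed binding slots, lifting per-draw texture and material limits, reducing CPU binding overhead, and giving artists more freedom.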
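Virtualized geometry systems generally choose detail by asking how large a simplification error would look on screen. The Python sketch below is not Nanite’s algorithm, which works on a hierarchy of clusters; it is a simplified, hypothetical per-object version of the same screen-space-error idea: pick the coarsest level of detail whose error projects to at most one pixel. The LOD error values, field of view, and resolution are made up for the example.

```python
import math


def projected_error_px(world_error, distance, fov_y_rad, screen_height_px):
    """Size in pixels of a world-space geometric error seen at `distance`."""
    # Height of the view frustum slice at this distance, in world units.
    view_height = 2.0 * distance * math.tan(fov_y_rad / 2.0)
    return world_error * screen_height_px / view_height


def select_lod(lod_errors, distance, fov_y_rad, screen_height_px, max_error_px=1.0):
    """lod_errors[i] is the world-space error of LOD i, growing as i gets coarser.

    Return the coarsest LOD whose projected error stays within max_error_px.
    """
    chosen = 0
    for lod, err in enumerate(lod_errors):
        if projected_error_px(err, distance, fov_y_rad, screen_height_px) <= max_error_px:
            chosen = lod
        else:
            break
    return chosen


FOV_Y = math.radians(90.0)             # hypothetical vertical field of view
LOD_ERRORS_M = [0.0, 0.01, 0.04, 0.16]  # hypothetical error per LOD, in meters
for d in (5.0, 20.0, 100.0):
    print(d, select_lod(LOD_ERRORS_M, d, FOV_Y, 1080))
# 5.0 0
# 20.0 1
# 100.0 3
```

The farther the object, the fewer pixels each meter of error covers, so coarser geometry becomes invisible to the eye and the saved triangles go back into the 16 ms budget.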

The engine isn’t just processing; it’s prioritizing, compressing, and adapting, all in real time.

The Takeaway

As Malek likes to say:

“How much can you do in just 16 milliseconds? Then do it again, and again, and again.”

At SKYREAL, we’re not just optimizing render pipelines. We’re building real-time experiences where performance is the platform, and those 16 ms are our canvas.